v1.5.0

This release integrates with GPT 4 Vision and supports more AI service providers, including local LLM inference servers.

- New: Supports more AI service providers: Mistral AI, Perplexity and Together AI

- New: Supports custom OpenAI-compatible servers: OpenAI proxy, LocalAI and LM Studio Inference Server...

- New: Incorporates ShotSolve into PDF Pals: take a screenshot of your PDF and ask AI about it

- New: Switch to the new embedding model text-embedding-3: better & cheaper

- New: Added support for the new GPT 4 Turbo model (gpt-4-0125-preview)

- New: Allow searching within the current chat

- Fix: Fixed the bug where the Upgrade window keeps showing

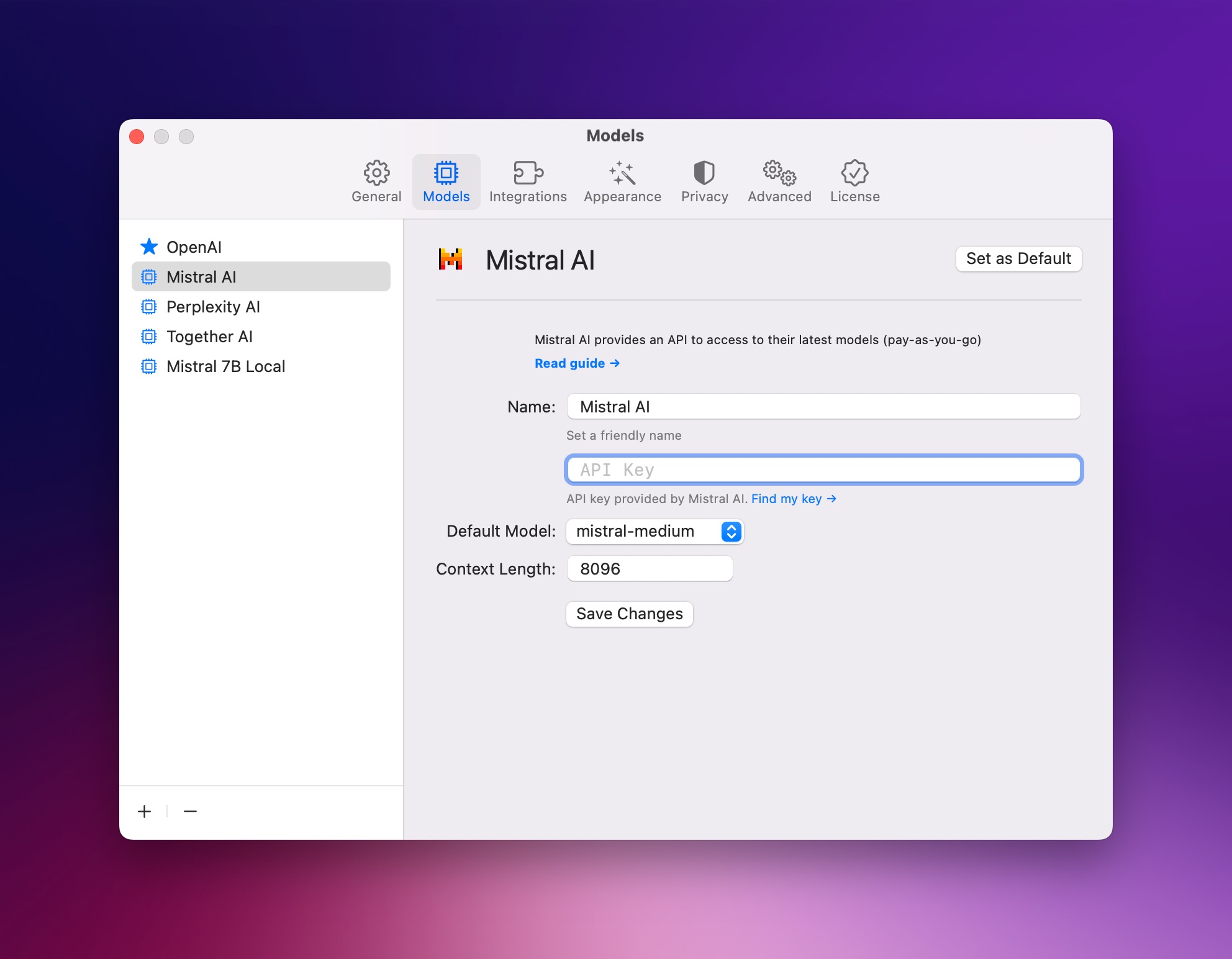

More AI service providers

In this version, I've added support for 3 new AI service providers: Mistral AI, Perplexity & Together AI.

Note that PDF Pals will always use OpenAI's embedding model or a local AI model to process your documents.

To set up, go to Settings > Models, click the (+) button, then fill in the form. Pretty straightforward, isn't it?

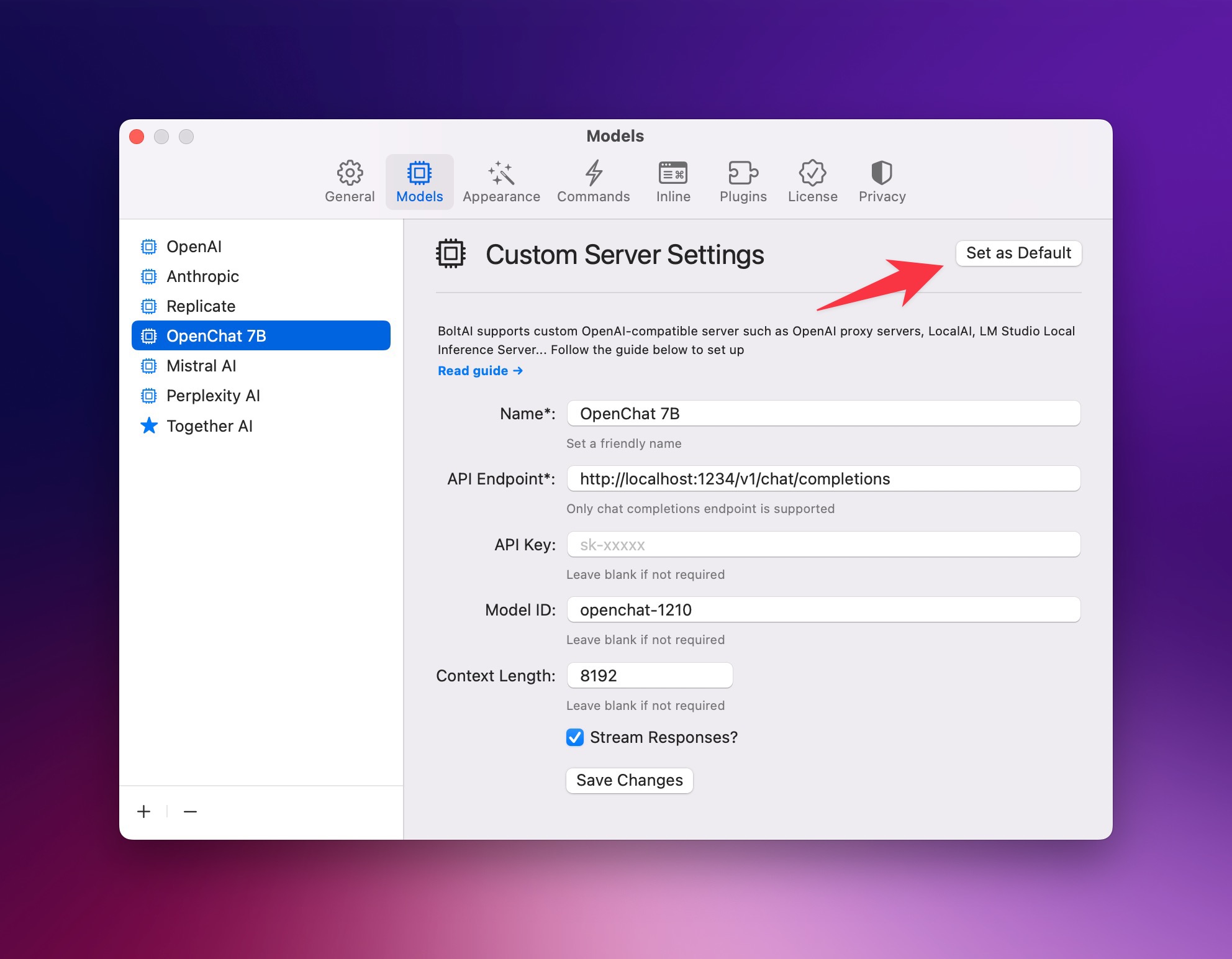

Use PDF Pals with Local Inference Servers

In this version, I also added the ability to use a custom OpenAI-compatible inference server. It's still in beta, though. If you find an issue, please reach out; I will prioritize this feature.

To start, follow this setup guide.

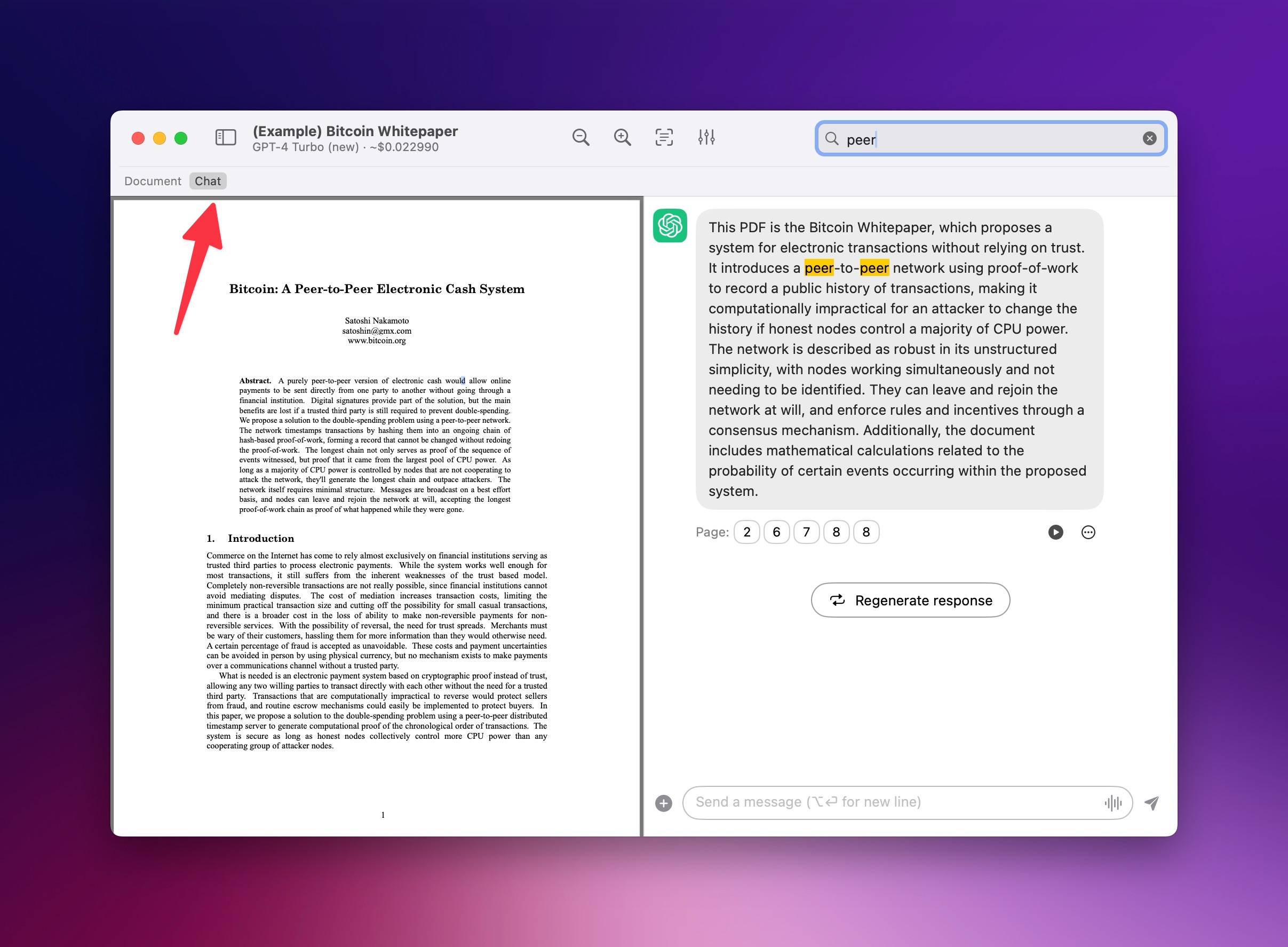
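
For the curious, "OpenAI-compatible" roughly means the server accepts the same chat-completions request shape as OpenAI's API, just at a different base URL. Here is a minimal sketch that builds (but does not send) such a request. The base URL `http://localhost:1234/v1`, the model name `local-model`, and the helper `build_chat_request` are illustrative assumptions, not a description of how PDF Pals works internally.

```python
import json


def build_chat_request(base_url, model, question, api_key=None):
    """Build URL, headers and JSON body for an OpenAI-style chat completion.

    Hypothetical helper for illustration; it only constructs the request.
    """
    url = base_url.rstrip("/") + "/chat/completions"
    headers = {"Content-Type": "application/json"}
    if api_key:  # local servers often do not need a key
        headers["Authorization"] = f"Bearer {api_key}"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return url, headers, json.dumps(body)


# Assumed local endpoint and placeholder model name; adjust to your server.
url, headers, payload = build_chat_request(
    "http://localhost:1234/v1/", "local-model", "Summarize page 3."
)
print(url)
```

If your server exposes a different port or path, only the base URL should need to change; the request body stays the same.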

ShotSolve: easily chat with screenshots

Earlier this year, I started a new project called ShotSolve. It's a free app that allows you to take a screenshot and quickly ask GPT-4 Vision about it.

In this version, I've incorporated its features back into PDF Pals. You can now take a screenshot of the PDF quickly via the "Capture" toolbar button, then let GPT-4 Vision handle your request.

Note that it requires an OpenAI API key as this feature utilizes the GPT 4 Vision model.

Here is the demo video

Other improvements

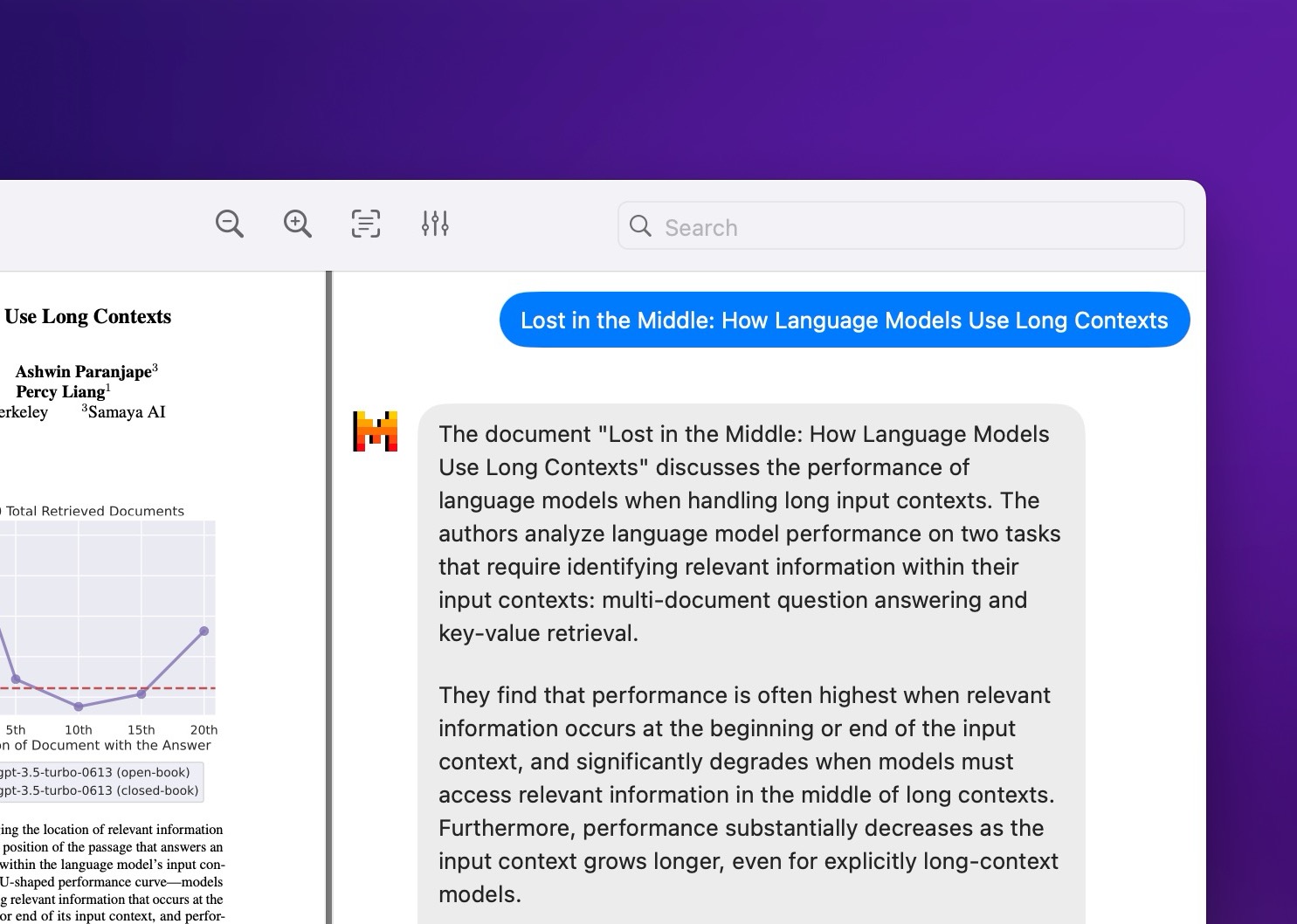

Search within conversation

I've added the ability to search within the current chat. You can manually switch the search scope (screenshot below), or use the keyboard shortcut Command + Shift + F.

Cheaper and better document analysis

In this version, I've switched to the new embedding model from OpenAI (text-embedding-3). It's 5x cheaper than the previous model (text-embedding-ada), which allows you to process a 63,000-page document for just $1. Enjoy cheaper and better document analysis!
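
The "63,000 pages for $1" figure can be sanity-checked with a little arithmetic. The sketch below assumes $0.02 per 1M tokens for the new model, $0.10 per 1M tokens for the previous one, and roughly 800 tokens per page. These numbers are assumptions that are not stated in this post, so check them against OpenAI's current pricing.

```python
def pages_per_dollar(usd_per_million_tokens, tokens_per_page=800):
    """Estimate how many pages can be embedded for one US dollar."""
    tokens_per_dollar = 1_000_000 / usd_per_million_tokens
    return round(tokens_per_dollar / tokens_per_page)


# Assumed list prices in USD per 1M tokens (not taken from this post).
NEW_MODEL_PRICE = 0.02  # new text-embedding-3 model (assumption)
OLD_MODEL_PRICE = 0.10  # previous ada embedding model (assumption)

new_pages = pages_per_dollar(NEW_MODEL_PRICE)
old_pages = pages_per_dollar(OLD_MODEL_PRICE)
print(new_pages, old_pages, new_pages / old_pages)
```

Under those assumptions the estimate comes out at about 62,500 pages per dollar, which rounds to the 63,000 quoted above, with a 5x gap between the two models.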

New GPT 4 Turbo model

I've added support for the improved GPT 4 Turbo model gpt-4-0125-preview. OpenAI fixed the issue with laziness in this version. If you use PDF Pals to gain insights from programming ebooks, you should use this model.

Other minor bug fixes & improvements

And that's it

See you in the next update 👋

PS: If you enjoy PDF Pals, please <a href="https://love.pdfpals.com" target="_blank">share a testimonial</a>. Much appreciated 🙏